Beyond the Brainstorming Plateau

What AI for Teachers Could Look Like

Introduction

Eighty-four percent of high school students now use generative AI for schoolwork.¹ Teachers can no longer tell whether a competent essay reflects genuine learning or a 30-second ChatGPT prompt. The assessment system they’re operating in, one that measured learning by evaluating outputs, was designed before this technology existed.

Teachers are already trying to redesign curriculum, assessment, and practice for this new reality. But they’re doing it alone, at 9pm, without tools that actually help.

I spent the last few months trying to understand why. What I found wasn’t surprising, exactly, but it was clarifying: the AI products flooding the education market generate worksheets and lesson plans, but that’s not what teachers are struggling with. The hard work is figuring out how to teach when the old assignments can be gamed and the old assessments no longer prove understanding. Current tools don’t touch that problem.

Based on interviews with eight K-12 teachers and analysis of 350+ comments in online teacher communities, I found three patterns that explain why current AI tools fail teachers and what it would take to build ones they’d actually adopt. The answer isn’t more content generation. It’s tools that act as thinking partners, where AI helps teachers reason through the redesign work this moment demands.

The Broken Game

For most of educational history, producing the right output served as reasonable evidence that a student understood the material. If a student wrote a coherent essay, they probably knew how to write. If they solved a problem correctly, they probably understood the underlying concepts. The output was a reliable proxy for understanding.

But now that ChatGPT can generate an entire essay in five seconds, that proxy no longer holds. A student who submits a coherent essay might have written it themselves, revised an AI draft, or copied one wholesale. The traditional system can’t tell the difference.

This breaks the game.

Students aren’t cheating in the way we traditionally understand it. They’re using available tools to win a game whose rules no longer make sense. And the asymmetry here matters since students have already changed their behavior. They have AI in their pockets and use it daily. Whether that constitutes “adapting” or merely “gaming the system in new ways” depends on the student. Some are using AI as a crutch that bypasses thinking. Others are genuinely learning to leverage it. Most are somewhere in between, figuring it out without much guidance.

Meanwhile, teachers are still operating the old system: grading essays that might be AI-generated, assigning problem sets that can be solved in seconds, trying to assess understanding through outputs that no longer prove anything. They’re refereeing a broken game without the tools to redesign it.

The response can’t be banning AI or punishing students for using it. Those are losing battles. The response is redesigning the game itself: rethinking what we assess, how we assess it, and how students practice.

Teachers already sense this. In my interviews, they described the same realization again and again: their old assignments don’t work anymore, their old assessments can be gamed, and they’re not sure what to do about it. They are not resistant to change, but they are overwhelmed by the scope of what needs to change, and they’re doing it without support.

This matters beyond one industry. While K-12 EdTech is a $20 billion market, and every major AI lab is positioning for it, the real stakes are larger. When knowledge is instantly accessible and outputs are trivially producible, what does it even mean to learn? The companies building educational AI aren’t just selling software; the software is a means to an end. They’re shaping the answer to that question.

And right now, they’re getting it wrong.

What Teachers Actually Need

The conventional explanation for uneven AI adoption is that teachers resist change. But my research surfaced a different explanation. Teachers aren’t resistant to AI; they’re resistant to AI that doesn’t help them do the actual hard work.

So, what is the ‘hard work’, exactly?

Teacher work falls into two categories. The first is administrative: entering grades, formatting documents, drafting routine communications. The second is the core of the job: designing curriculum, assessing student understanding, providing feedback that changes how students think, diagnosing what a particular student needs next. This second category is how teachers actually teach.

Current AI tools focus almost entirely on the first category. They generate lesson plans, create worksheets, and draft parent emails. Teachers find this useful. It surfaces ideas quickly, especially when colleagues aren’t available to bounce ideas off of. But administrative efficiency isn’t what teachers are struggling with.

The hard problems are pedagogical. How do you redesign an essay assignment when the old prompt can be completed by AI in 30 seconds? How do you assess understanding when AI can produce correct-looking outputs? How do you structure practice so students develop genuine understanding rather than skip the struggle that builds it?

These questions require deep thinking. And most teachers have to work through them alone. They don’t have a curriculum expert available at 9pm when planning tomorrow’s lesson, or a colleague who knows the research, knows their students, and can reason through these tradeoffs with them in real time.

AI could be that thinking partner. That’s the opportunity current tools are missing.

Three Patterns from the Classroom

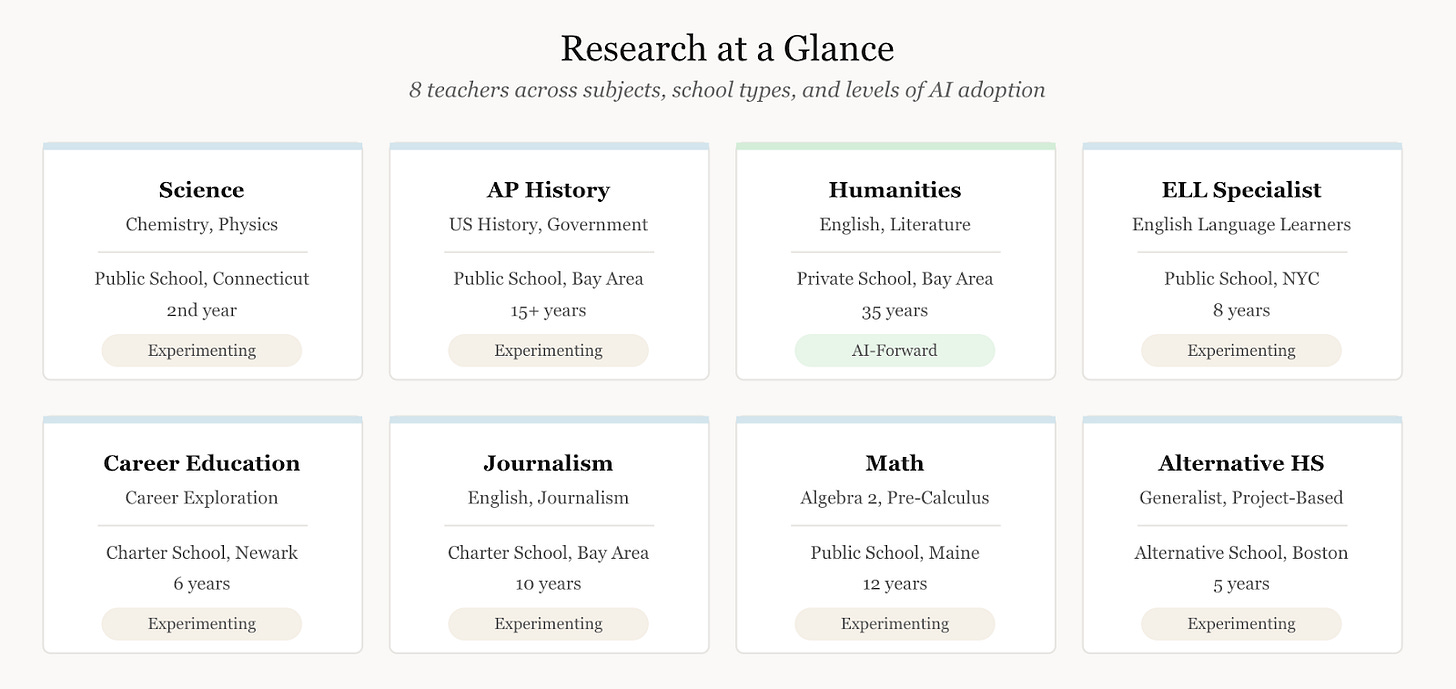

To understand why current tools miss the mark, I interviewed eight K-12 teachers across subjects, school types, and levels of AI adoption. I then analyzed 350+ comments in online teacher communities to test whether their experiences reflected broader patterns or idiosyncratic views.

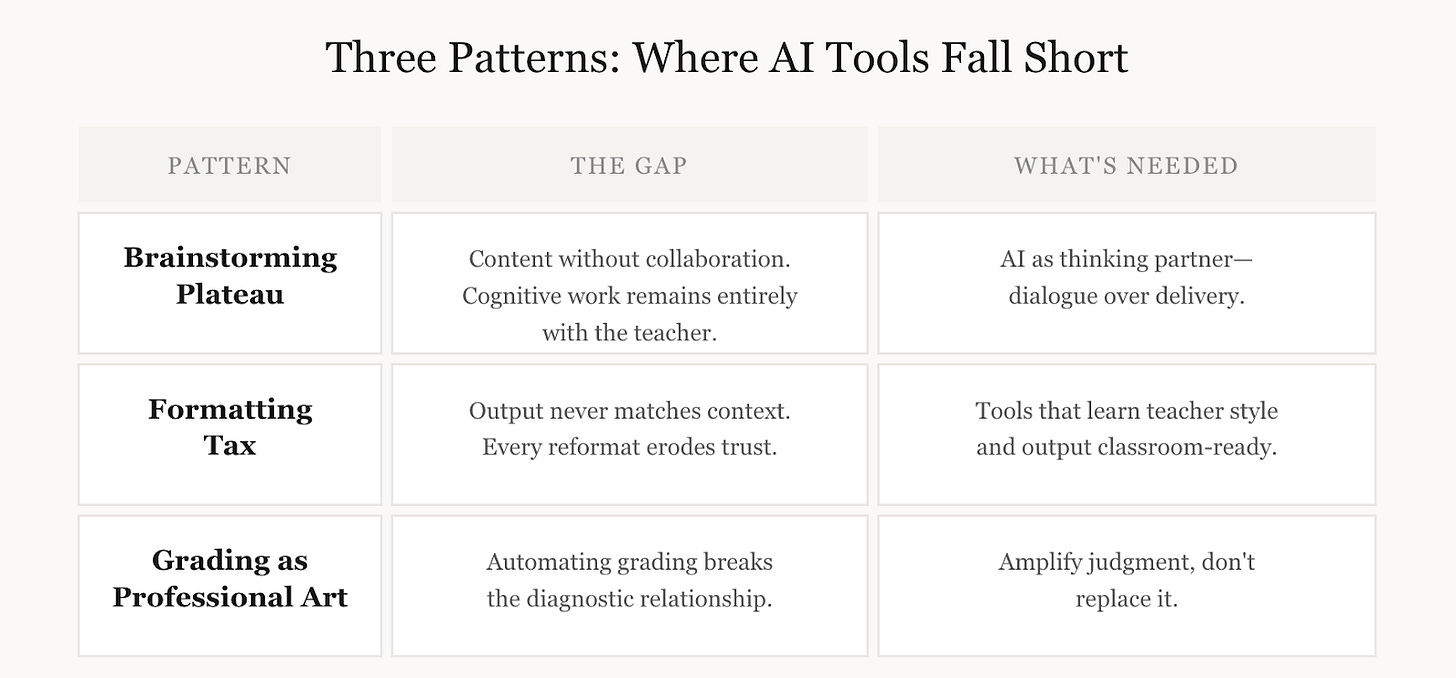

What I found was remarkably consistent. Three patterns kept emerging, and each one reveals a gap between what teachers need and what current AI tools provide.

Pattern 1: The Brainstorming Plateau

Every teacher I interviewed used AI for brainstorming. It’s the universal entry point. “Give me ten practice problems.” “Suggest some activities for this unit.” “What are some real-world connections I could make?” Teachers find this useful because it surfaces ideas quickly when colleagues aren’t available.

But that’s where it stops.

One math teacher described the ceiling bluntly. “Lesson planning, I find to be not very useful. Does it save time or does it not save time? I think it does not save time, because if you are using big chunks, you spend a lot of time going back over them to make sure that they are good, that they don’t have holes.”

He wasn’t alone. This pattern appeared across all eight interviews and echoed throughout the Reddit threads I analyzed. Teachers described the same ceiling again and again. “The worksheets it made for me took longer to fix than what I could have just made from scratch.”

Notice what they’re highlighting: AI tools produce content that teachers then have to evaluate alone. They aren’t reasoning through curriculum decisions with AI; they’re cleaning up after it. The cognitive work, the hard part, remains entirely theirs, and now there is extra cleanup work on top of it.

A genuine thinking partner would work differently. Not just “here’s a draft assignment” but “I notice your old essay prompt can be completed by AI in 30 seconds. What if we redesigned it so students have to draw on class discussions, articulate their own reasoning, reflect on what they actually understand versus what AI could generate for them? Here are a few options. Which direction fits what you’re trying to teach?”

That’s the kind of collaboration teachers want.

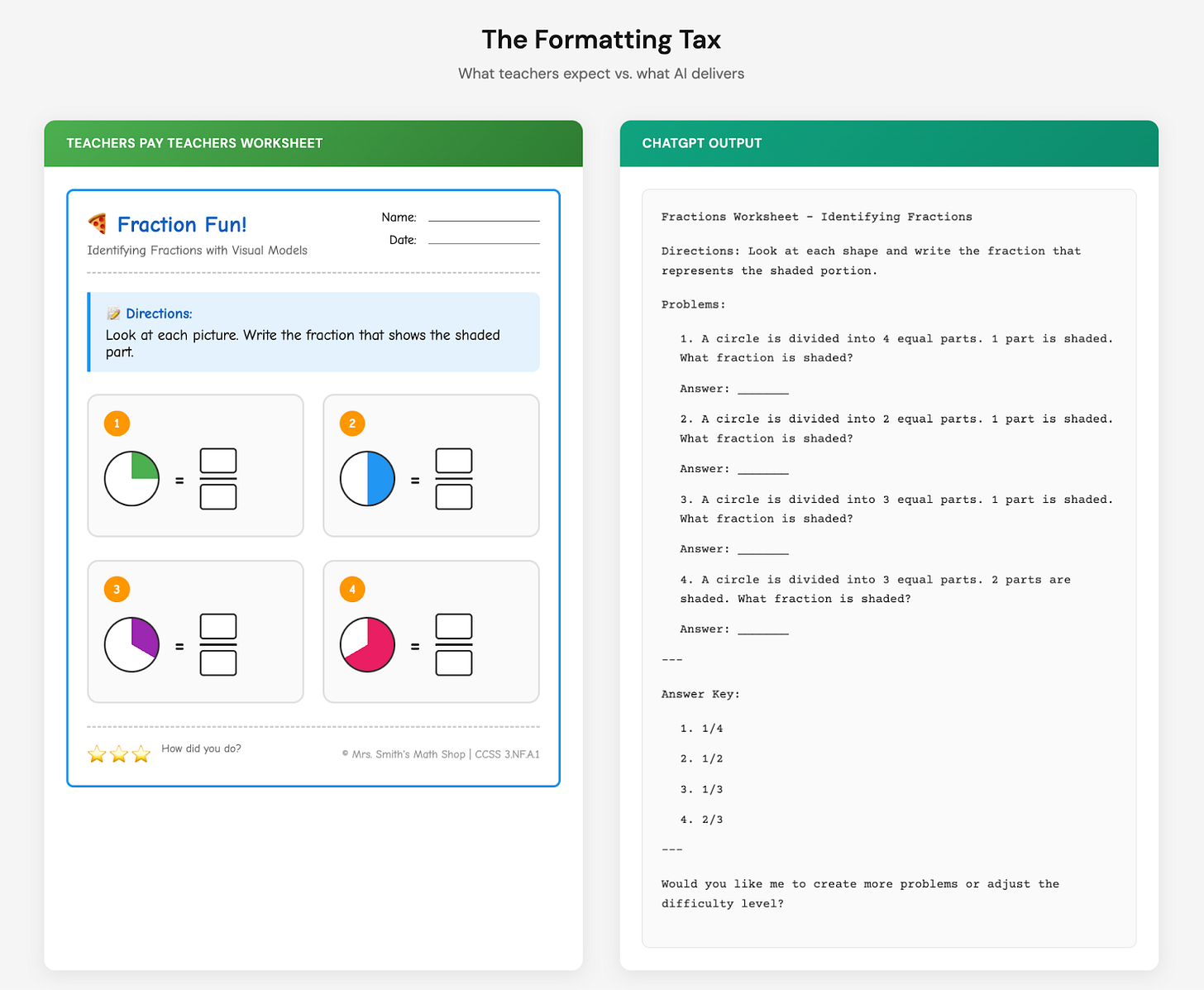

Pattern 2: The Formatting Tax

Even if AI could be that thinking partner, teachers would need to trust it first. And trust is exactly what current tools are squandering.

A recurring frustration across my interviews and Reddit threads is that AI generates content quickly, but the output rarely matches classroom needs. Teachers described spending significant time reformatting, fixing notation that didn’t render correctly, and restructuring content to fit their established norms. One teacher put it simply: “By the time you’ve messed around with prompts and edited the results into something usable, you could’ve just made it yourself.”

This might seem minor, a ‘fit-and-finish’ problem, but it highlights a broader trust problem.

Every time a teacher reformats AI output, they are reminded: this tool doesn’t know my classroom. It doesn’t understand my context, and it produces generic content, expecting me to do the translation.

And this points to something deeper. Teachers know that these tools weren’t designed for classrooms. They were built for enterprise use cases and retrofitted for education, optimizing for impressive demos to district leaders rather than daily classroom use. Teachers haven’t been treated as partners in shaping what AI-augmented teaching should look like.

A tool that understood context would work differently. Not “here’s a worksheet on fractions” but “here’s a worksheet formatted the way you like, with the name field in the top right corner, more white space for showing work, vocabulary adjusted for your English language learners. I noticed your last unit emphasized visual models, so I’ve included those. Want me to adjust the difficulty progression?”

That’s what earning trust looks like.

Pattern 3: Grading as Diagnostic Work

And even then, trust is only part of the story. Even well-designed tools will fail if they try to automate the wrong things.

When it comes to grading, teachers are willing to experiment with AI for low-stakes feedback (exit tickets, rough drafts, etc.). But when they do, the results disappoint. “The feedback was just not accurate,” one teacher told me. “It wasn’t giving a bad signal, but it wasn’t giving them the things to focus on either.”

For teachers, the problem is that the AI feedback is just too generic.

One English teacher explained why this matters: “I know who could do what. Some students, if they get to be proficient, that’s amazing. Others need to push further. That’s dependent on their starting point, not something I see as a negative.”

This is why teachers resist automating grading even when they’re exhausted by it. Grading isn’t just about assigning scores; it’s diagnostic work. When a teacher reads student work, they’re asking themselves: what does this student understand? What did I do or not do that contributed to this outcome? How does this represent growth or regression for this particular student? Grading is how teachers know what to do tomorrow for their students.

A tool that understood this would work differently. Not “here’s a grade and some feedback” but “I noticed three students made the same error on question 4. They’re confusing correlation with causation. Here’s a mini-lesson that addresses that misconception. Also, Marcus showed significant improvement on thesis statements compared to last month. And Priya’s response suggests she might be ready for more challenging material. What would you like to prioritize?”

That’s a thinking partner that helps teachers see patterns so they can make better decisions. The resistance to AI grading isn’t technophobia so much as an intuition that the diagnostic work is too important to outsource.
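As a rough illustration of what “surfacing patterns” could mean mechanically, here is a minimal Python sketch. It assumes each wrong answer has already been tagged with a misconception label, whether by the teacher or by some upstream step; that tagging is the hard part and is not shown. The function name, the data layout, and the threshold of three students are all hypothetical choices made for this example, not a description of any existing product.

```python
from collections import defaultdict


def shared_misconceptions(observations, min_students=3):
    """Group tagged errors by (question, misconception).

    Keeps only the groups that at least `min_students` distinct students share.
    `observations` is an iterable of (student, question, misconception) tuples.
    """
    students_by_error = defaultdict(set)
    for student, question, misconception in observations:
        students_by_error[(question, misconception)].add(student)
    return {
        key: sorted(students)
        for key, students in students_by_error.items()
        if len(students) >= min_students
    }


# Hypothetical, hand-tagged data for illustration only.
observations = [
    ("Student A", 4, "correlation vs. causation"),
    ("Student B", 4, "correlation vs. causation"),
    ("Student C", 4, "correlation vs. causation"),
    ("Student D", 2, "units omitted"),
]

flagged = shared_misconceptions(observations)
for (question, misconception), students in flagged.items():
    print(f"Question {question}: {len(students)} students show '{misconception}'")
```

The sketch only groups and counts. It does not assign a grade or decide what to reteach; that decision stays with the teacher, which is the point of the pattern.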

The Equity Dimension

Now, these patterns don’t affect all students equally… and this is the part that keeps me up at night.

Some students will figure out how to use AI productively. They have adults at home who can guide them, or the metacognitive skills to self-regulate. But many won’t. Research already shows that first-generation college students are less confident in appropriate AI use cases than their continuing-generation peers.² The gap is already forming.

This is an equity problem hiding in plain sight. When AI tools fail teachers, they fail all students, but they fail some students more than others. The students who most need guidance on how to use AI productively are least likely to get it outside of school.

Teachers are the only scalable intervention, but only if they can see what’s happening: which students are over-relying on AI as a crutch, which are underutilizing it, and which are developing genuine capability. Without tools that surface these patterns, the redesign helps only the students who were already going to be fine.

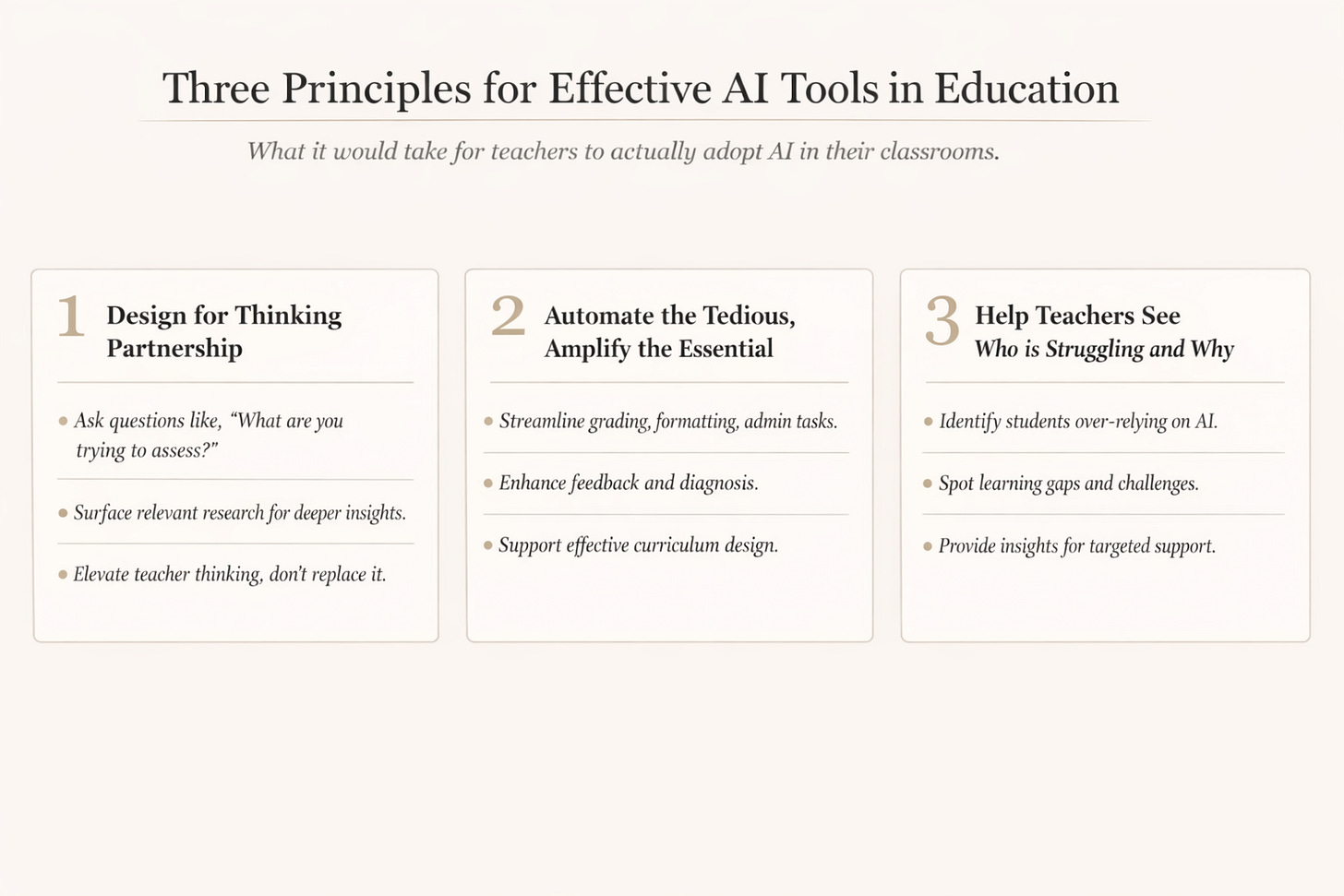

What Would Actually Work

These three patterns point to the same gap: tools are currently designed to generate content, when teachers need help thinking through problems. Years of context-blind, teacher-replacing products have eroded trust among this critical population.

So what would it actually take to build tools teachers adopt? Three principles emerge from my research.

Design for thinking partnership, not content generation. The measure of success isn’t content volume. It’s whether teachers make better decisions. That means tools that engage in dialogue rather than just produce drafts. Tools that ask “what are you trying to assess?” before generating an assignment. Tools that surface relevant research when teachers are wrestling with hard questions. The goal is elevating teacher thinking, not replacing it.

Automate the tedious, amplify the essential. Teachers want some tasks done faster: entering grades, formatting documents, drafting routine communications. Other tasks they want to do better: diagnosing understanding, designing curriculum, providing feedback that changes how students think. The first category is ripe for automation. The second requires amplification, where AI enhances teacher capability rather than substituting for it. “AI can surface patterns across student work” opens doors that “AI can grade your essays” closes.

Help teachers see who is struggling and why. Build tools that surface patterns: which students show signs of skipping the thinking, which show gaps that AI is papering over, and which are developing genuine capability. This is the diagnostic information teachers need to differentiate instruction and ensure the students who need the most support actually get it.

The Broader Challenge

This is a preview of a challenge that will confront every domain where AI meets skilled human work.

The question of whether AI should replace human judgment or augment it isn’t abstract. It gets answered, concretely, in every product decision. Do you build a tool that grades essays, or one that helps teachers understand what students are struggling with? Do you build a tool that generates lesson plans, or one that helps teachers reason through pedagogical tradeoffs?

The first approach is easier to demo and easier to sell. The second is harder to build but more likely to actually work, and more likely to be adopted by the people who matter.

Education is a particularly revealing test case because the stakes are legible. When AI replaces teacher judgment in ways that don’t work, students suffer visibly. But the same dynamic plays out in medicine, law, management, and every domain where expertise involves judgment, not just information retrieval.

The companies that figure out how to build AI that genuinely augments expert judgment, rather than producing impressive demos that experts eventually abandon, will have learned something transferable. And very, very important.

Conclusion

Students are developing their relationship with AI right now, largely without deliberate guidance. They’re playing a broken game, and they know it. Whether they learn to use AI as a crutch that bypasses thinking or as a tool that augments it depends on whether teachers can redesign the game for this new reality.

That won’t happen with better worksheets. It requires tools designed with teachers, not for them. Tools that treat teachers as collaborators in figuring out what AI-augmented education should look like, rather than as end-users to be sold to.

What would that look like in practice? A tool that asks teachers what they’re struggling with before offering solutions. A tool that remembers their classroom context, their students, their constraints. A tool that surfaces research and options rather than just producing content. A tool that helps them see patterns in student work they might have missed. A tool that makes the hard work of teaching, the judgment, the diagnosis, the redesign, a little less lonely.

The teachers I interviewed are ready to do this work and they’re not waiting for permission.

They’re waiting for tools worthy of the challenge.

Research Methodology

This essay draws on semi-structured interviews with eight K-12 teachers across different subjects (math, English, science, history, journalism, career education), school types (public, charter, private), and levels of AI adoption. All quotes are anonymized to protect participant privacy.

To test generalizability, I analyzed 350+ comments in online teacher communities, particularly Reddit’s r/Teachers. I intentionally selected high-engagement threads representing both enthusiasm and skepticism about AI tools. A limitation worth noting is that high-engagement threads tend to surface the most articulate and polarized voices, which may not represent typical teacher experiences. Still, the consistency between interview data and online discourse, despite different geographic contexts and school types, suggests these patterns reflect meaningful dynamics in K-12 AI adoption.

—

¹ College Board Research, “U.S. High School Students’ Use of Generative Artificial Intelligence,” June 2025. The study found 84% of high school students use GenAI tools for schoolwork as of May 2025, with 69% specifically using ChatGPT.

² Inside Higher Ed Student Voice Survey, August 2025.

—

About the Author: Akanksha has over a decade of experience in education technology, including classroom teaching through Teach for America, school leadership roles at charter schools, curriculum design and operations leadership at Juni Learning, and AI strategy work at Multiverse, where she led efforts to scale learning delivery models from 1:30 to 1:300+ ratios while maintaining quality outcomes.